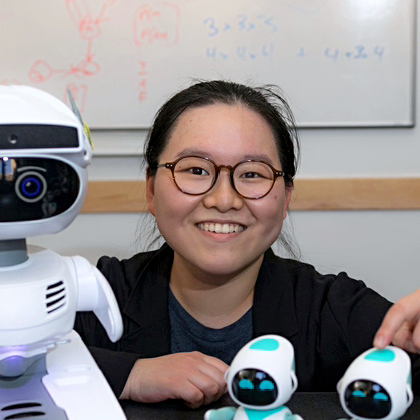

In recent years, Large Language Models (LLMs) have become the focus of intense interest in the AI community, and their use in interactive robots, in both academic research and commercial products, has attracted equal interest. However, there are currently no guidelines for, or categorizations of, their use across the application spaces of Human-Robot Interaction (HRI).

This workshop invites academic researchers and industry professionals who are actively using or are interested in using LLMs for HRI, and who can contribute to the development of high-level, community-wide guidelines for how LLMs can fit correctly and defensibly into the future of HRI research and development.

Topics relevant to this workshop include HRI studies that directly or indirectly involve LLMs, as well as HRI studies that use the idea of “Scarecrows” (i.e., using LLMs to provide placeholder functionality, similar to Wizard-of-Oz studies) within a larger HRI system. We also encourage broader questions and contributions regarding how these models should be conceptualized within frameworks for effective, responsible HRI.

We invite research contributions, commentary, and questions on the following topics of interest, alone or in combination:

- the impact of using LLMs to "stub out" software modules as "Scarecrows" (a role traditionally filled by humans in Wizard-of-Oz contexts) when building and testing larger HRI experiments;

- opportunities and applications of LLMs in HRI;

- risks and perils of LLMs in interactive robots;

- reporting guidelines, ethical considerations, or real-world implications of LLMs in HRI;

- safety of LLM-driven interaction, and of fine-tuned LLMs, during interactions with the world or users; and/or

- position or framing papers on the role of LLMs in HRI.